What WASAPI does is provide an exclusive, direct path to the sound card, which allows the computer to output audio at the sample rate that matches the music. If, for instance, you start a game after starting the WASAPI player, the game will produce no sound, since the audio path is dedicated to the player only (and therefore runs at the correct sample rate). WASAPI can be bit-perfect, and even in shared mode it uses a high-quality resampler. Generally speaking, though, when it comes to pure quality, it can't get any better than what exclusive-mode WASAPI offers. I'm not entirely sure how DirectSound is handled in Windows 10, but it is either equal to WASAPI shared mode or worse. In fact, since Windows Vista, the APIs Winamp uses (such as DirectSound and WaveOut) are emulated on top of a software mixer for compatibility, which further distances the audio path from the hardware. Modern audio players support WASAPI output for direct access to the audio hardware (especially in WASAPI exclusive mode).

- WASAPI's exclusive mode for rendering audio is a native way on Windows to render audio undisturbed, similar to Steinberg's 'Audio Stream Input/Output' (ASIO). The YASAPI/NT output plugin may serve as a replacement for any other Winamp output plugin.

- Winamp is a free multimedia player made by Nullsoft. It supports numerous audio and video formats. It also plays streamed video and audio content, both live and recorded, authored worldwide.

The Windows Audio Session API (WASAPI) enables client applications to manage the flow of audio data between the application and an audio endpoint device.

Header files Audioclient.h and Audiopolicy.h define the WASAPI interfaces.

Every audio stream is a member of an audio session. Through the session abstraction, a WASAPI client can identify an audio stream as a member of a group of related audio streams. The system can manage all of the streams in the session as a single unit.

The audio engine is the user-mode audio component through which applications share access to an audio endpoint device. The audio engine transports audio data between an endpoint buffer and an endpoint device. To play an audio stream through a rendering endpoint device, an application periodically writes audio data to a rendering endpoint buffer. The audio engine mixes the streams from the various applications. To record an audio stream from a capture endpoint device, an application periodically reads audio data from a capture endpoint buffer.
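In native shared-mode WASAPI, the size of each periodic write follows from two numbers: the endpoint buffer size in frames (IAudioClient::GetBufferSize) and the frames still queued for playback (IAudioClient::GetCurrentPadding). The client may write the difference, obtaining that space through IAudioRenderClient::GetBuffer. The Python sketch below models only this arithmetic; the helper names are hypothetical and no Windows API is called.

```python
def writable_frames(buffer_frames: int, padding_frames: int) -> int:
    """Frames the client may write now: total buffer size minus the frames
    still queued for playback (the 'padding')."""
    if not 0 <= padding_frames <= buffer_frames:
        raise ValueError("padding must lie between 0 and the buffer size")
    return buffer_frames - padding_frames


def bytes_for_frames(frames: int, channels: int, bytes_per_sample: int) -> int:
    """Size in bytes of `frames` interleaved frames (block align = channels * sample size)."""
    return frames * channels * bytes_per_sample


# Example: a 480-frame buffer with 300 frames still queued, stereo 32-bit samples
free = writable_frames(480, 300)
print(free, bytes_for_frames(free, channels=2, bytes_per_sample=4))  # 180 1440
```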

WASAPI consists of several interfaces. The first of these is the IAudioClient interface. To access the WASAPI interfaces, a client first obtains a reference to the IAudioClient interface of an audio endpoint device by calling the IMMDevice::Activate method with the iid parameter set to IID_IAudioClient. The client calls the IAudioClient::Initialize method to initialize a stream on an endpoint device. After initializing a stream, the client can obtain references to the other WASAPI interfaces by calling the IAudioClient::GetService method.
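IAudioClient::Initialize takes the requested buffer duration (hnsBufferDuration) as a REFERENCE_TIME, expressed in 100-nanosecond units, while IAudioClient::GetBufferSize reports the buffer in frames. The sketch below converts between the two; the constant name REFTIMES_PER_SEC mirrors the one used in Microsoft's sample code, and the helper names are hypothetical. Both helpers round down.

```python
REFTIMES_PER_SEC = 10_000_000  # REFERENCE_TIME ticks per second (100 ns each)


def frames_to_reftime(frames: int, sample_rate: int) -> int:
    """Duration of `frames` at `sample_rate`, in 100-ns REFERENCE_TIME units."""
    return frames * REFTIMES_PER_SEC // sample_rate


def reftime_to_frames(hns: int, sample_rate: int) -> int:
    """Number of whole frames that fit into a REFERENCE_TIME duration."""
    return hns * sample_rate // REFTIMES_PER_SEC


print(frames_to_reftime(480, 48_000))      # 100000 (10 ms)
print(reftime_to_frames(200_000, 44_100))  # 882 (20 ms)
```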

Many of the methods in WASAPI return error code AUDCLNT_E_DEVICE_INVALIDATED if the audio endpoint device that a client application is using becomes invalid. Frequently, the application can recover from this error. For more information, see Recovering from an Invalid-Device Error.
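A common recovery pattern is to catch the invalidated-device error, discard the old stream, and reopen a new one (typically on whatever device is now the default). The sketch below shows only that control flow in plain Python: DeviceInvalidated, open_stream, play and the retry limit are hypothetical stand-ins for the COM calls; the HRESULT value 0x88890004 for AUDCLNT_E_DEVICE_INVALIDATED is the one defined in the Windows SDK headers.

```python
# HRESULT value of AUDCLNT_E_DEVICE_INVALIDATED as defined in audioclient.h
AUDCLNT_E_DEVICE_INVALIDATED = 0x88890004


class DeviceInvalidated(Exception):
    """Hypothetical exception standing in for a WASAPI call that failed
    with AUDCLNT_E_DEVICE_INVALIDATED."""
    hresult = AUDCLNT_E_DEVICE_INVALIDATED


def run_with_recovery(open_stream, play, max_reopens=3):
    """Open a stream and play it; if the endpoint is invalidated, discard the
    stream and reopen (e.g. on the new default device), up to max_reopens times.

    open_stream() -> stream   hypothetical: activate and initialize a new client
    play(stream) -> result    hypothetical: may raise DeviceInvalidated
    """
    for attempt in range(max_reopens + 1):
        stream = open_stream()
        try:
            return play(stream)
        except DeviceInvalidated:
            if attempt == max_reopens:
                raise  # give up and let the caller report the error
            # Otherwise fall through: the next iteration opens a fresh stream.
```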

WASAPI implements the following interfaces.

| Interface | Description |
|---|---|
| IAudioCaptureClient | Enables a client to read input data from a capture endpoint buffer. |
| IAudioClient | Enables a client to create and initialize an audio stream between an audio application and the audio engine or the hardware buffer of an audio endpoint device. |
| IAudioClock | Enables a client to monitor a stream's data rate and the current position in the stream. |
| IAudioRenderClient | Enables a client to write output data to a rendering endpoint buffer. |
| IAudioSessionControl | Enables a client to configure the control parameters for an audio session and to monitor events in the session. |
| IAudioSessionManager | Enables a client to access the session controls and volume controls for both cross-process and process-specific audio sessions. |
| IAudioStreamVolume | Enables a client to control and monitor the volume levels for all of the channels in an audio stream. |
| IChannelAudioVolume | Enables a client to control the volume levels for all of the channels in the audio session that the stream belongs to. |
| ISimpleAudioVolume | Enables a client to control the master volume level of an audio session. |

WASAPI clients that require notification of session-related events should implement the following interface.

| Interface | Description |
|---|---|
| IAudioSessionEvents | Provides notifications of session-related events such as changes in the volume level, display name, and session state. |


[This tutorial applies to Windows Vista and later versions only]

Starting with Windows Vista, Microsoft rewrote the multimedia subsystem of the Windows operating system from the ground up; at the same time, Microsoft introduced a new API, known as the Core Audio API, which allows interacting with the multimedia subsystem and with audio endpoint devices (sound cards).

The Core Audio APIs implemented in Windows Vista and higher versions are the following:

• Multimedia Device (MMDevice) API. Clients use this API to enumerate the audio endpoint devices in the system.

• DeviceTopology API. Clients use this API to directly access the topological features (for example, volume controls and multiplexers) that lie along the data paths inside hardware devices in audio adapters.

• EndpointVolume API. Clients use this API to directly access the volume controls on audio endpoint devices. This API is primarily used by applications that manage exclusive-mode audio streams.

• Windows Audio Session API (WASAPI). Clients use this API to create and manage audio streams to and from audio endpoint devices.

All of the APIs listed above, with the exception of WASAPI, are accessible through the CoreAudioDevicesMan class, exposed by the CoreAudioDevices property, as described in the tutorial How to access settings of audio devices in Windows Vista and later versions.

In general, WASAPI operates in two modes:

| • | In exclusive mode (also called DMA mode), unmixed audio streams are rendered directly to the audio adapter: no other application's audio can play, and signal processing has no effect. Exclusive mode is useful for applications that demand the least amount of intermediate processing of the audio data, or that need to output compressed audio data such as Dolby Digital, DTS or WMA Pro over S/PDIF. |

| • | In shared mode, audio streams are rendered by the application, optionally with per-stream audio effects known as Local Effects (LFX) applied (such as per-session volume control). The streams are then mixed by the global audio engine, where a set of global audio effects (GFX) may be applied. Finally, they are rendered on the audio device. Unlike Windows XP and older versions, there is no longer a direct path from DirectSound to the audio drivers: DirectSound and MME are entirely emulated through WASAPI working in shared mode, which results in pre-mixed PCM audio sent to the driver in a single format (in terms of sample rate, bit depth and channel count). The end user can configure this format through the 'Advanced' tab of the Sound applet of the Control Panel, as seen in the picture below: |

To enable the usage of WASAPI, you must call the InitDriversType method with the nDriverType parameter set to DRIVER_TYPE_WASAPI. The call to InitDriversType is mandatory before any call to the InitRecordingSystem, GetInputDevicesCount and GetInputDeviceDesc methods: if InitDriversType were called later, it would report an error. If for any reason you need to call it later, perform the following sequence of calls:

1. ResetEngine method

2. InitDriversType method

3. If needed, enumerate the input devices again through the GetInputDevicesCount and GetInputDeviceDesc methods.

4. ResetControl method
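The ordering rule above can be expressed as a small state check. The sketch below is a hypothetical toy model, not the component's API: its methods only record calls so that the constraint (InitDriversType before InitRecordingSystem and device enumeration; ResetEngine before a late InitDriversType) can be exercised and tested.

```python
class RecorderModel:
    """Toy model of the call-order rule; method names echo the tutorial,
    but nothing here talks to a real recorder."""

    def __init__(self):
        self.driver_type = None
        self.engine_used = False  # set once the recording system or enumeration is touched

    def init_drivers_type(self, driver_type: str) -> None:
        if self.engine_used:
            raise RuntimeError("InitDriversType called too late: call ResetEngine first")
        self.driver_type = driver_type

    def _require_driver(self) -> None:
        if self.driver_type is None:
            raise RuntimeError("call InitDriversType first")
        self.engine_used = True

    def init_recording_system(self) -> None:
        self._require_driver()

    def get_input_devices_count(self) -> int:
        self._require_driver()
        return 0  # placeholder: real enumeration is not modeled

    def reset_engine(self) -> None:
        self.engine_used = False

    def reset_control(self) -> None:
        pass  # nothing to model beyond the ordering itself


# Documented sequence for a late driver change:
rec = RecorderModel()
rec.init_drivers_type("DRIVER_TYPE_WASAPI")
rec.init_recording_system()
rec.reset_engine()                           # 1. ResetEngine
rec.init_drivers_type("DRIVER_TYPE_WASAPI")  # 2. InitDriversType
rec.get_input_devices_count()                # 3. re-enumerate if needed
rec.reset_control()                          # 4. ResetControl
```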

Important note: unlike DirectSound and ASIO drivers, when using WASAPI drivers there is no need to reset the engine when an audio device is added to or removed from the system: new calls to the WASAPI.DeviceGetCount and WASAPI.DeviceGetDesc methods will report the change.

After initializing the usage of WASAPI through the InitDriversType method, Audio Sound Recorder for .NET gives access to WASAPI itself through the WASAPIMan class accessible through the WASAPI property.

WASAPI can manage three different types of devices:

| • | Render devices are playback devices where audio data flows from the application to the audio endpoint device, which renders the audio stream. |

| • | Capture devices are recording devices where audio data flows from the audio endpoint device, which captures the audio stream, to the application. |

| • | Loopback devices are recording devices that capture the mix of all of the audio streams being rendered by a specific render device, even if those streams are being played by a third-party multimedia application such as Windows Media Player; each render device always has a corresponding loopback device. |

Available WASAPI devices can be enumerated through the WASAPI.DeviceGetCount and WASAPI.DeviceGetDesc methods; if you only need to enumerate input devices, you can also use the GetInputDevicesCount and GetInputDeviceDesc methods. In both cases, only devices reported as 'Enabled' by the system are listed: unplugged or disabled devices are not enumerated.

Before using an input device for recording or an output device for playback, you must start the device itself: for exclusive mode use the WASAPI.DeviceStartExclusive method, and for shared mode the WASAPI.DeviceStartShared method. In both cases the started device can be stopped through the WASAPI.DeviceStop method, and you can check whether a device is already started through the WASAPI.DeviceIsStarted method.

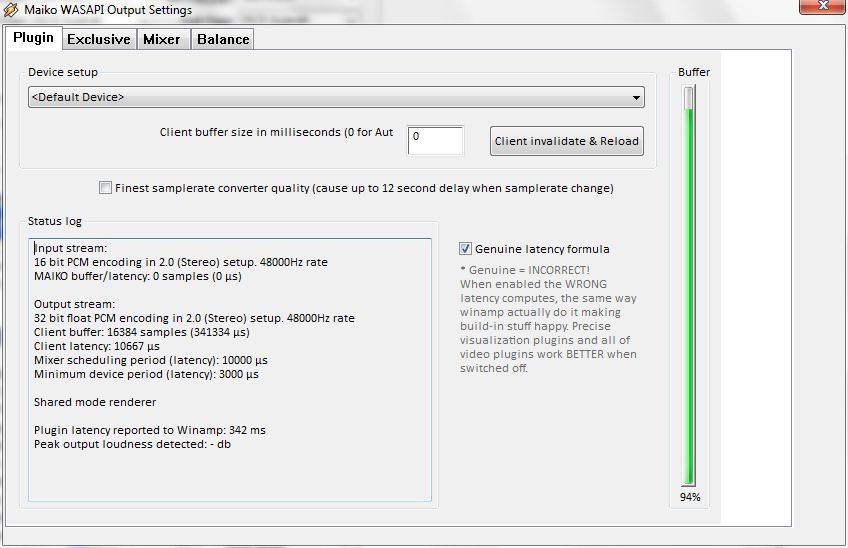


It is important to note that, even if the output device and the input device are located on the same physical device, the system sees them as different entities, so you have to start them as separate devices with two calls to the WASAPI.DeviceStartExclusive or WASAPI.DeviceStartShared method.

For exclusive mode, you need to start the device by specifying, in the call to the WASAPI.DeviceStartExclusive method, the playback format, represented by the frequency and number of channels; you can check whether a WASAPI device supports a specific format through the WASAPI.DeviceIsFormatSupported method.
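A player might negotiate an exclusive-mode format by trying the track's own sample rate first and then falling back through a list of common rates. In the sketch below, is_supported is a hypothetical callback standing in for a query such as WASAPI.DeviceIsFormatSupported (whose actual signature is not reproduced here), and the fallback order is an arbitrary assumption; if a fallback rate is chosen, the player itself must resample.

```python
from typing import Callable, Iterable, Optional, Tuple

COMMON_RATES = (48_000, 44_100, 96_000, 192_000)  # assumed fallback order


def pick_exclusive_format(source_rate: int, channels: int,
                          is_supported: Callable[[int, int], bool],
                          fallback_rates: Iterable[int] = COMMON_RATES
                          ) -> Optional[Tuple[int, int]]:
    """Return the first (rate, channels) pair accepted in exclusive mode,
    trying the source rate first; None means: use shared mode instead."""
    candidates = [source_rate] + [r for r in fallback_rates if r != source_rate]
    for rate in candidates:
        if is_supported(rate, channels):
            return rate, channels
    return None


# Hypothetical device that only accepts these two stereo formats
device_formats = {(44_100, 2), (96_000, 2)}
print(pick_exclusive_format(44_100, 2, lambda r, c: (r, c) in device_formats))  # (44100, 2)
```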

For shared mode, you rely directly on the playback format chosen in the Sound applet of the Windows Control Panel; you can retrieve the current format through the WASAPI.DeviceSharedFormatGet method.

Now, once the capture and loopback devices needed for recording have been started, we have two options:


| • | Start a recording session from a single input device |

In order to start a recording session from a capture device you can use the StartFromWasapiCaptureDevice method.

Through WASAPI loopback it is also possible to capture the audio stream being played by a rendering endpoint device, even if the stream is being played by other applications such as Windows Media Player or Winamp: to start a recording session from a loopback device, use the StartFromWasapiLoopbackDevice method.

As with the other recording start methods described in the How to perform a recording session tutorial, the recorded stream is stored in an output file or in a memory buffer.

| • | Start a recording session from multiple input devices at the same time and have the streams mixed into one single output file |

This solution takes several audio streams coming from different input devices (both capture and loopback devices) and passes their contents to the WASAPI mixer, which combines them into a single output stream that can be stored in a single output file or in a memory buffer.
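The mixing step can be pictured as a sample-wise weighted sum. The sketch below is a simplification, not the component's implementation: it assumes every input has already been converted to the same sample rate and channel layout as float samples in [-1.0, 1.0], applies a per-input volume, sums, and clamps. A real mixer also has to resample and keep the devices' clocks aligned.

```python
from typing import Sequence, Tuple


def mix_inputs(inputs: Sequence[Tuple[Sequence[float], float]]) -> list:
    """Mix (samples, volume) pairs into one stream; shorter inputs count as silence."""
    length = max((len(samples) for samples, _ in inputs), default=0)
    mixed = [0.0] * length
    for samples, volume in inputs:
        for i, sample in enumerate(samples):
            mixed[i] += sample * volume
    # Clamp so the summed signal stays within the valid float range.
    return [max(-1.0, min(1.0, x)) for x in mixed]


mic = [0.5, 0.75, 0.25]   # hypothetical capture-device samples
loopback = [0.25, 0.5]    # hypothetical loopback samples, played at half volume
print(mix_inputs([(mic, 1.0), (loopback, 0.5)]))  # [0.625, 1.0, 0.25]
```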

To do this, after starting the input devices you want to record from, attach them to the WASAPI mixer through separate calls to the WASAPI.MixerInputDeviceAttach method (one call for each input device); once all of the input devices of interest have been attached, start the recording session through the StartFromWasapiMixer method.
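The attach rules described here and in the next paragraphs can be summarized as a tiny state model. The class below is hypothetical and does not call the component; it only encodes the stated constraint that inputs cannot be attached while a recording session is running, while detaching remains possible.

```python
class MixerModel:
    """Toy model (hypothetical, not the component's API) of the mixer rules:
    inputs may be attached only while no recording session is running."""

    def __init__(self):
        self.attached = []       # indexes of attached input devices, in attach order
        self.recording = False

    def attach(self, device_index: int) -> None:
        if self.recording:
            raise RuntimeError("stop the recording session before attaching inputs")
        if device_index not in self.attached:
            self.attached.append(device_index)

    def detach(self, device_index: int) -> None:
        # Detaching is allowed at any time; raises ValueError if never attached.
        self.attached.remove(device_index)

    def is_attached(self, device_index: int) -> bool:
        return device_index in self.attached

    def start(self) -> None:
        self.recording = True

    def stop(self) -> None:
        self.recording = False
```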

It is important to note that, once the recording session has been started, you cannot attach further input devices until it has been stopped through the Stop method. After stopping the recording session and before starting a new one, you may optionally detach one or more input devices through the WASAPI.MixerInputDeviceDetach method; you can check whether a specific input device is already attached to the mixer with the WASAPI.MixerInputDeviceIsAttached method, and enumerate attached input devices through the combination of the WASAPI.MixerInputDeviceAttachedCountGet and WASAPI.MixerInputDeviceAttachedInfoGet methods.

Although it is possible to detach a specific input device from the WASAPI mixer during a recording session, as mentioned before you could not attach it again while the session is running. For this reason, if you need to temporarily 'disable' a specific input device, it is more convenient to set its volume to 0 using the WASAPI.DeviceVolumeSet method and, when needed again, to 'enable' it by reapplying its original volume; volume settings are described further in the next paragraph.
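The 'disable by volume' approach only needs the original volume to be remembered. In the sketch below, get_volume and set_volume are hypothetical callbacks standing in for WASAPI.DeviceVolumeGet and WASAPI.DeviceVolumeSet (their real signatures and volume scale are not shown here); the helper just stores and restores whatever value the device reported.

```python
class InputMuter:
    """Remembers each device's volume so it can be 'disabled' (volume 0) and
    later restored, instead of detaching it from a running mixer."""

    def __init__(self, get_volume, set_volume):
        self._get = get_volume    # hypothetical: device -> current volume
        self._set = set_volume    # hypothetical: (device, volume) -> None
        self._saved = {}

    def disable(self, device) -> None:
        if device not in self._saved:          # keep the first saved value
            self._saved[device] = self._get(device)
            self._set(device, 0)

    def enable(self, device) -> None:
        if device in self._saved:
            self._set(device, self._saved.pop(device))


volumes = {1: 0.8}  # hypothetical device 1 at volume 0.8
muter = InputMuter(volumes.get, volumes.__setitem__)
muter.disable(1)
muter.enable(1)
print(volumes[1])  # 0.8
```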

WASAPI clients can individually control the volume level of each audio session. WASAPI applies a session's volume setting uniformly to all of the streams in the session; you can modify the session volume through the WASAPI.DeviceVolumeSet method and retrieve the current volume through the WASAPI.DeviceVolumeGet method. If you need to get or set the master volume of the given WASAPI device, which is shared by all running processes, use the CoreAudioDevices.MasterVolumeGet / CoreAudioDevices.MasterVolumeSet methods.
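User interfaces often show volumes in decibels, while volume APIs usually take a number. For a linear amplitude gain the conversion is dB = 20·log10(gain). This is a general helper under that assumption: the scale used by WASAPI.DeviceVolumeSet is not stated here, and some Windows controls (for example the endpoint volume "scalar") use an audio-tapered curve rather than a linear gain, so check which kind of value an API expects before converting.

```python
import math


def gain_to_db(gain: float) -> float:
    """Linear amplitude gain -> decibels (20 * log10); silence maps to -inf."""
    if gain < 0:
        raise ValueError("gain must be non-negative")
    return -math.inf if gain == 0 else 20.0 * math.log10(gain)


def db_to_gain(db: float) -> float:
    """Decibels -> linear amplitude gain."""
    return 10.0 ** (db / 20.0)


print(round(gain_to_db(0.5), 2))  # -6.02
```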

This raises an important issue: depending on the value of the nStateMask parameter of the CoreAudioDevices.Enum method, the list of CoreAudio devices may not contain unplugged or disabled devices, whereas the list of WASAPI devices always contains all of the devices installed in the system, even if currently unplugged or disabled. Because the same physical device may therefore appear at different positions in the two lists, use the WASAPI.DeviceCoreAudioIndexGet method to find the one-to-one correspondence: given the zero-based index of a device in the list of WASAPI devices, it reports the zero-based index of the same physical device in the list of CoreAudio devices.
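WASAPI.DeviceCoreAudioIndexGet does this mapping for you; conceptually, it amounts to matching a stable identifier for the same endpoint across two lists that are filtered differently. The sketch below illustrates the idea with hypothetical endpoint-ID strings. The DEVICE_STATE_* values are the ones defined in the Windows SDK header mmdeviceapi.h; whether the nStateMask parameter uses exactly these flags is an assumption.

```python
# Endpoint state flags as defined in mmdeviceapi.h
DEVICE_STATE_ACTIVE = 0x1
DEVICE_STATE_DISABLED = 0x2
DEVICE_STATE_NOTPRESENT = 0x4
DEVICE_STATE_UNPLUGGED = 0x8


def filter_by_state(devices, state_mask):
    """Keep the IDs of (id, state) pairs whose state matches the mask."""
    return [dev_id for dev_id, state in devices if state & state_mask]


def build_index_map(wasapi_ids, core_audio_ids):
    """Map each WASAPI list index to the CoreAudio list index of the same
    endpoint, or None when the endpoint is absent from the CoreAudio list."""
    position = {endpoint_id: i for i, endpoint_id in enumerate(core_audio_ids)}
    return [position.get(endpoint_id) for endpoint_id in wasapi_ids]


# Hypothetical system: the USB headset is unplugged
all_devices = [("speakers", DEVICE_STATE_ACTIVE),
               ("usb-headset", DEVICE_STATE_UNPLUGGED),
               ("mic", DEVICE_STATE_ACTIVE)]
wasapi_list = [dev_id for dev_id, _ in all_devices]           # lists everything
core_list = filter_by_state(all_devices, DEVICE_STATE_ACTIVE)  # active only
print(build_index_map(wasapi_list, core_list))  # [0, None, 1]
```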


The configuration of audio devices is not static: for example, with USB audio devices the configuration may change when a USB device is plugged in or unplugged, or when microphones or Line-In sources are physically inserted into or removed from their connectors. When the configuration of audio devices changes, you may need to enumerate the available input devices again and reassign them to the instanced players by resetting the multimedia engine and the control, as described at the beginning of this tutorial.

When a USB audio device is added to the system, for example when a USB sound card is installed for the first time, the container application can be informed in real time by handling the CoreAudioDeviceAdded event.

When a device has been added to the system, it may still be reported as 'Disabled' because of the jack-sensing feature, which keeps a device disabled until the microphone is physically plugged into the sound card; when the audio device becomes 'Enabled' or 'Disabled', one or more CoreAudioDeviceStateChange events may be generated.

If the USB audio device is removed from the system, by physically disconnecting it and uninstalling its driver, the CoreAudioDeviceRemoved event is generated.


As mentioned, you may receive more than one CoreAudioDeviceStateChange event at the same time, so when you start the reset procedure you should ignore any CoreAudioDeviceStateChange events after the first one.
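One way to honor "ignore the events after the first one" is a flag that is set when a reset is scheduled and cleared once it has completed. The sketch below is hypothetical and single-threaded: the handler names are illustrative, and in a .NET container application the same logic would live in the CoreAudioDeviceStateChange event handler, with proper synchronization if events arrive on another thread.

```python
class ResetDebouncer:
    """Schedules the reset procedure on the first state-change event and
    ignores further events until that reset has finished."""

    def __init__(self, reset_procedure):
        self._reset = reset_procedure  # hypothetical: ResetEngine, re-enumerate, ResetControl
        self._pending = False

    def on_state_change(self, *_event_args) -> bool:
        """Return True if this event scheduled a reset, False if it was ignored."""
        if self._pending:
            return False
        self._pending = True
        return True

    def run_pending_reset(self) -> None:
        if self._pending:
            try:
                self._reset()
            finally:
                self._pending = False  # accept new events once the reset is done
```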


If supported by the sound card driver, WASAPI also makes it possible to emulate the 'Listen to this device' feature (see the screenshot below), available in the 'Listen' tab of the 'Sound' applet of the Windows Control Panel:

The 'Listen to this device' feature passes the audio coming from the microphone directly through the PC speakers; alternatively, you can connect your MP3 player (iPod, mobile phone, etc.) to the Line-In port (using the jack connector) and hear the music through the PC speakers.

This feature can be emulated by invoking the WASAPI.ListenInputDeviceStart method, which receives the indexes of the capture device and of the render device, and can be stopped later through the WASAPI.ListenInputDeviceStop method.
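Conceptually, such a pass-through is a small queue between the capture side and the render side. The toy model below is a hypothetical illustration of that idea, not how WASAPI.ListenInputDeviceStart is implemented: captured blocks are queued, the render side takes them in order, silence is rendered when the queue is empty, and the oldest block is dropped if rendering falls behind (a crude way to bound latency when the two devices' clocks drift).

```python
from collections import deque


class PassThrough:
    """Toy 'Listen to this device' loop between a capture and a render device."""

    def __init__(self, block_frames: int, max_blocks: int = 4):
        self.block_frames = block_frames
        # deque with maxlen discards the oldest block when a new one arrives while full
        self.queue = deque(maxlen=max_blocks)

    def on_captured(self, block) -> None:
        self.queue.append(block)

    def next_render_block(self):
        if self.queue:
            return self.queue.popleft()
        return [0.0] * self.block_frames  # underrun: render silence
```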


Samples of WASAPI usage in Visual C#.NET and Visual Basic.NET can be found in the following samples installed with the product's setup package:

- WasapiRecorder

- WasapiInputMixerRecorder